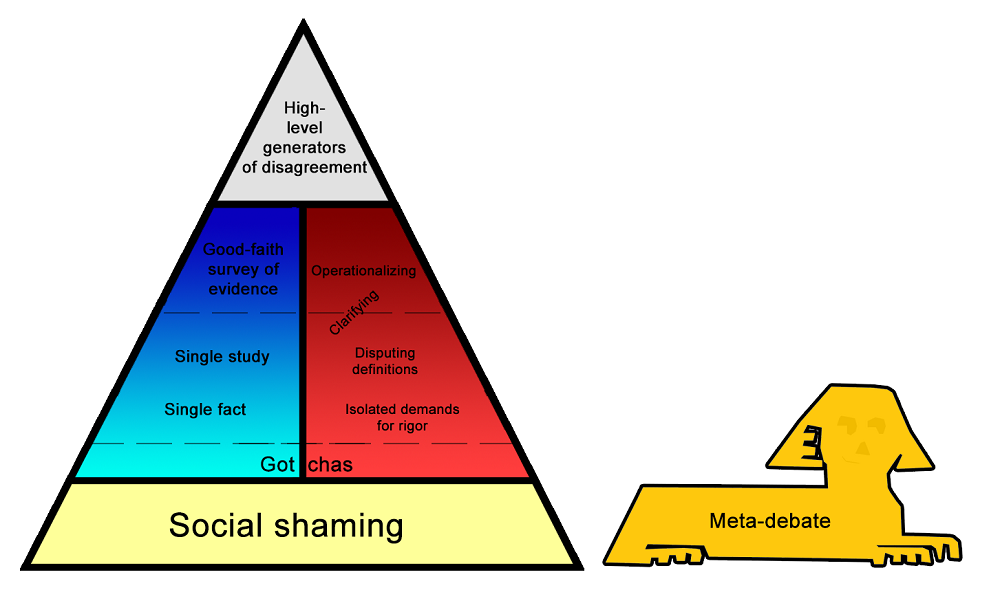

He offers this pyramid:

He writes,

If you’re intelligent, decent, and philosophically sophisticated, you can avoid everything below the higher dotted line. Everything below that is either a show or some form of mistake; everything above it is impossible to avoid no matter how great you are.

I would emphasize this point. Don’t bother responding to arguments below the higher dotted line. And certainly don’t endorse such arguments.

I think of Russ Roberts, who often is trying to argue above the higher dotted line and gets hit by people who instead argue below the lower dotted line.

This is the link:

http://slatestarcodex.com/2018/05/08/varieties-of-argumentative-experience/

You can avoid it yourself and ignore it from others, but you won’t necessarily be able to avoid its consequences when others use it. While responding may be counterproductive, silence can read as acquiescence, and both need measure.

I avoid websites (like Crooked Timber, for example) because of this. There are so many below-the-line attacks on anyone not supporting the tribal allegiance that there is no point in participating at all. Thus the only way to really avoid it is to avoid the site altogether. Pity, as I wish I could have more open dialogue with the far left.

Thanks for having such a lot of civil discussion here. Not sure there’s been so much disagreement, tho. It would be good to see some disagreements here, highlighted. It’s true that there is often disagreement by Arnold about some point by another author, but that’s not quite disagreement here.

The Intellectual Dark Web folk are, by and large, trying to have civil, rational, respectful disagreements. It’s also fine to be here and have more discussion without so much disagreement.

If you point out that someone’s argument is a hasty generalization based on a single study, are you engaging in a gotcha? Should you ignore it and not engage?

If they are seeking out a single study to support some “core principle” then saying that study is wrong won’t do much, because the core principle remains and they will just find another study.

Either you have to change someone’s core principles (very hard) or appeal to some deeper core principle which would override a lesser one if new evidence were presented. Let’s say someone believes the following:

1) The most important thing is X

2) Y creates X

3) Study Z confirms subset Y creates X

If you attack Z without attacking #2 they will likely find some other study.

If you attack #2 you might make better progress because #2 is just subject to #1; it is a means, not an end. Of course this would require a lot of evidence: you would have to prove that it’s not just a problem of a random study but a systematic problem with X. For instance, the inability to set efficient prices is a feature of communism and is due to the problem of centralized planning in a world of decentralized information.

However, if all this just goes back to #1 it’s probably a waste of time to debate #3. Let’s say their #1 is “equality of outcome” and yours is “equality of opportunity”. You might be able to convince someone that the USSR was bad at achieving “equality of outcome” (#2), but that doesn’t mean you could agree on what should take its place.

If you think their #3 just points back to a #1 which is irreconcilable with your #1 then discussion is likely a waste of time. That’s when power politics begin, since you can only have one #1. I think a certain amount of #3 is just people who have already figured out differing #1 means inevitable conflict, even if that knowledge is unconscious.

A key question is whether an argument is being offered in good faith. In my experience, that can be tested in two ways, and failure to engage with these tests is a good sign one is not dealing with a reasonable, rational, good faith partner.

1. Epistemic Tipping Point: What kind of evidence would change your mind? Or, in a kind of ‘pre-registration’, what is a reasonable standard to evaluate future evidence, before you know what that data looks like?

One sees this in court cases all the time. “We only heard one person’s testimony, but she didn’t seem very credible. I think the accused is innocent.” – “What would it take to change your mind?” – “Well, another person’s corroborating testimony would get me to the 50/50 point, and throw in some DNA matching and video and I’ll definitely change my mind to guilty.”

So, to get back to the Ezra Klein and Sam Harris fracas, Harris should have said to Klein, “Look, the day Murray predicted decades ago is now just around the corner. We are going to get some solid data from huge Genome-Wide Association Studies, and those results are going to speak directly to these questions. And my question to you is, what would it take to convince you that my claims are correct and not “scientific racism” (Yglesias’ smear), when the multivariate regression analysis spits out the results? p of what? r-squared of what?”

If the person can’t answer, then they are revealing themselves to be not engaging in good faith discussion, and to be unreasonable, the definition of which is an unwillingness to change one’s mind no matter what new information becomes available, or how strong it is. When people talk about people making politics into a religion, this is the kind of thing they mean: stubborn positions that don’t need evidence, that they won’t allow to be contingent on, or vulnerable to, new information.

2. Is vs. Ought test: Related to the above, there is a species of bad faith argument which pretends that a question isn’t one of a conflict of different and irreconcilable fundamental values, but instead can be resolved by reference to pragmatic and objective considerations.

So, for example, many people argue against the death penalty by claiming it’s too expensive to pursue. There’s a sense in which that’s a reasonable claim, but, more commonly, a sense in which it’s just being offered as a smokescreen to cover up the real reasons.

The question to ask is, “Ok, if we kept making it cheaper and cheaper, at what point would you be cool with capital punishment.” I’ve never heard anyone say, “$100,000 and under in total legal costs. Then it’s fine by me.” Cost has nothing to do with what the heart of the dispute is really about. It’s a “fight them over there, so we don’t have to fight them over here” strategy, that defends against having to engage on ground that is harder to defend in objective terms.

I’ve never liked Murray’s argument about genomic sequencing changing the debate. We already have enough evidence really. We’ve had enough since The Bell Curve. It’s pretty obvious the general ballpark conclusions aren’t going to change.

What are we really going to end up finding out? Whether heredity is 0.7 versus 0.8 or something? His opponents know they already lost the argument on evidence and it didn’t change anything; what could new data possibly bring to the battlefield?

The question is the tenability of the current counter-arguments, and the availability of any new ones.

Over time, it does in fact become embarrassing to repeat old false arguments, even on this matter, where everybody is willing to bend over backwards to support any claim that confirms their ideological and normative hopes.

After Edwards tore apart the faulty logic, people did indeed gradually abandon Lewontin’s fallacy, because it made them seem dumb and behind the times. Same thing goes for Lewis’ demonstration of Stephen Gould’s frauds against Morton: no one serious brings him up anymore, because they know they’ll look foolish.

Eventually the remaining arguments become fewer and more tenuous, like a last, desperate defense of a doomed enterprise. That’s the situation we’re in today, where most of the hired gun dishonest pseudo-experts, e.g., Turkheimer and Nisbett, are painted into a corner and so have to double-down on the last possible arguable claim.

Specifically, they have to rely on the claim that we don’t know of any genes for intelligence, and so can’t exclude the possibility that every population group has an equal distribution of the genes that matter, and that what appears to be a ‘heritable’ statistical disparity, is merely perpetuated disadvantaging from racism and discrimination (or colonization), etc.

The trouble is, we’re going to find the genes. We’re already finding a few of the most important of them, and things are now at a point where it’s only a matter of time before we find the rest, and also are able to infer the zero-load version of every gene. And, just like we are now able to predict certain features like height with good accuracy and precision from genes alone, so too will we have that for cognitive traits.

The problem for the slimy Turkheimers of the world is that it will be hard to simultaneously be up to date and in the know on all the latest GWAS insights and the different distributions of genes and features by population group, and to also argue that we need to carve out a special exemption for intelligence because … why?

I’m not saying that he won’t still try – certainly he will – but eventually he’s going to go the way of Lewontin and Gould, especially as influence diminishes in a global context.

Do people need arguments?

In a world where you had genetic sequencing, would James Damore not be fired by Google?

I don’t really care if blank slate academics look more and more ridiculous in debates nobody cares about. They already look ridiculous enough. What matters is when regular people will be able to say “X is true, therefore Y is unjust” and not get their lives destroyed. I don’t think genetic sequencing is going to add much ammunition to that debate that we don’t already have.

If you point out that someone’s argument is a hasty generalization based on a single study, are you engaging in a gotcha?

No, Scott has in mind a different type of argument. (The chart isn’t very helpful without his explanations.)

1. Is there any particular example that comes to your mind of a case in which someone did that to Russ Roberts?

2. The trouble with “good faith reviews of the evidence” is that these tend to get bogged down in trial-like “battles of the experts” and questions about whether the available evidence is trustworthy and reliable. The replication crisis is a euphemism for the problem that the current system of evidence generation is very vulnerable to compromise and no longer deserves reverential deference, especially in any case of active political or ideological controversy. Peer review turns out to be fool’s gold instead of a gold standard.

3. Ezra Klein recently did a podcast with Sam Harris after Harris complained that Klein was complicit in defaming him as a racist for having Charles Murray on his show. I strongly recommend reading the transcript for many examples of slimy argumentation on Klein’s part which seem high on the pyramid but in fact are all quite low. What lets Klein get away with that is the life-ruining consequences of truthful PC violation, which leaves Harris having to fight with his hands tied behind his back. Commitment to strong norms of argumentation alone cannot produce productive public debate when one of the participants is backed by a mob ready to strike, and it’s important to not let the appearance of debate give the false impression that all reasonable points of view have adequate voice and opportunity for fair hearing.

People sometimes use disagreements over definitions to avoid dealing with facts that don’t support their point. However, I think it isn’t really possible to have productive discussion unless people are using terms that mean the same thing.

One person’s “high level generator of disagreement” is someone else’s _____.

A recent book related to these issues of argument, fact finding, and persuasion is Peter H. Schuck’s _One Nation Undecided: Clear Thinking about Five Hard Issues That Divide Us_, published in 2017 by Princeton University Press.

He lists ten typical attributes of what he calls “hard problems.” It’s a long and dense book, but readable. “Not to be read with an apple in one hand and a martini in the other,” as my friend Chester likes to say.